Google hacking


Read other articles:

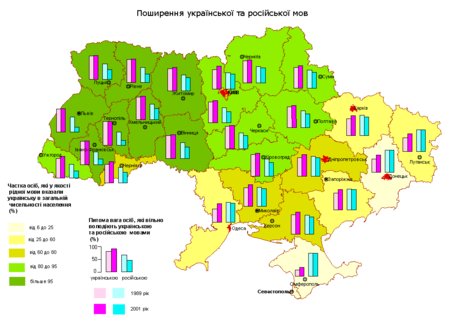

Языки Украины Жёлтый — украинский языкСветло-зелёный, голубой — относительно преобладает украинский Тёмно-зелёный — абсолютно преобладает русский язык Официальные украинский Языки меньшинств армянский, белорусский, болгарский, венгерский, гагаузский, идиш, караимски�…

Artikel ini sebatang kara, artinya tidak ada artikel lain yang memiliki pranala balik ke halaman ini.Bantulah menambah pranala ke artikel ini dari artikel yang berhubungan atau coba peralatan pencari pranala.Tag ini diberikan pada Januari 2023. Ini adalah daftar ilmuwan yang beragama Orang Kurdi. Caban al-Kurdi Abu Hanifa Dinaweri Abdussamad Babek Salahuddin Ayyubi Cakir al-Kurdi Ali İbn el-Asir Abdul Qadir Jaelani Macid al-Kurdi Al-Jazari Fahrul Nisa Abul Fida[1] Mala Ciziri Mala Batey…

Artikel ini sebatang kara, artinya tidak ada artikel lain yang memiliki pranala balik ke halaman ini.Bantulah menambah pranala ke artikel ini dari artikel yang berhubungan atau coba peralatan pencari pranala.Tag ini diberikan pada Oktober 2022. Benteng Kota Piring adalah benteng yang terletak di Kampung Kota Piring, Kelurahan Kota Piring, Kecamatan Tanjungpinang Timur, Kota Tanjungpinang, Provinsi Kepulauan Riau, Indonesia.[1] Benteng Kota Piring merupakan benteng pertahanan, tempat ting…

Israeli executive Dorit DorDor in 2019CitizenshipIsraeliAlma materTel Aviv University (BSc)Tel Aviv University (M. Sc.)Tel Aviv University (Ph.D in computer science)OccupationChief technology officerEmployerCheck PointSpouseTomer Dor Dorit Dor (Hebrew: דורית דור), (born February 5, 1967, in Haifa) is an Israeli executive, computer scientist, Chief technology officer of Check Point Software Technologies Ltd. and Israel Defense Prize winner. Biography Dorit Dor was born to Shaya Dolin…

Рочдейлский каналангл. Rochdale Canal Расположение Страна Великобритания РегионАнглия Характеристика Длина канала51 км Дата постройки1804 Шлюзов91 Габариты Габаритная ширина4,3 м Водоток ГоловаКолдер 53°42′36″ с. ш. 1°54′04″ з. д.HGЯOУстье · Мест…

Food produced by bacterial fermentation of milk For other uses, see Yogurt (disambiguation). YogurtA plate of yogurtTypeFermented dairy productServing temperatureChilledMain ingredientsMilk, bacteria Media: Yogurt Yogurt (UK: /ˈjɒɡərt/; US: /ˈjoʊɡərt/,[1] from Ottoman Turkish: یوغورت, romanized: yoğurt;[a] also spelled yoghurt, yogourt or yoghourt) is a food produced by bacterial fermentation of milk.[2] Fermentation of sugars in the milk by …

Voce principale: Associazione Sportiva Lucchese Libertas 1905. Associazione Sportiva Lucchese LibertasStagione 2006-2007Sport calcio Squadra Lucchese Allenatore Fulvio Pea (1ª-20ª) Paolo Stringara (21ª-34ª) Presidente Ahmad Fouzi Hadj Serie C18º nel girone A Coppa Italiaprimo turno Coppa Italia Serie Csemifinale Maggiori presenzeCampionato: Brunner (32) Miglior marcatoreCampionato: Carruezzo (11) StadioPorta Elisa 2005-2006 2007-2008 Si invita a seguire il modello di voce Questa pagina…

Emil Fischer Nama dalam bahasa asli(de) Hermann Emil Fischer BiografiKelahiran9 Oktober 1852 Euskirchen Kematian15 Juli 1919 (66 tahun)Berlin (Jerman) Tempat pemakamanFriedhof Wannsee, Lindenstraße Galat: Kedua parameter tahun harus terisi! Data pribadiPendidikanUniversitas Bonn University of Strasbourg KegiatanPenasihat doktoralJohann Friedrich Wilhelm Adolf von Baeyer SpesialisasiKimia Pekerjaanbiochemist, kimiawan, dosen Bekerja diUniversitas Ludwig Maximilian Munich University of Er…

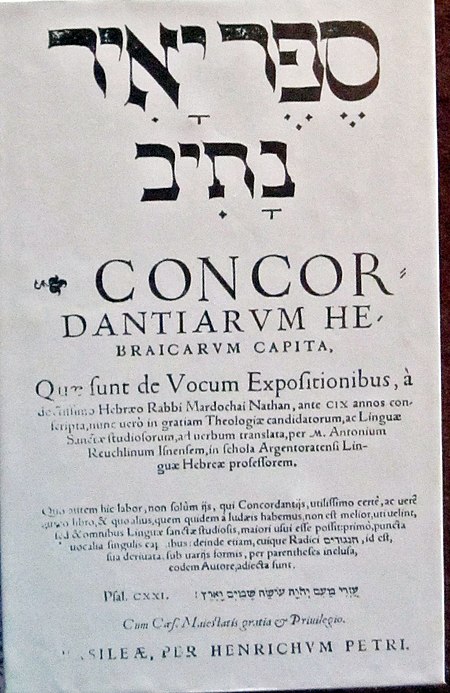

List of words or terms in a published book This article's lead section contains information that is not included elsewhere in the article. If the information is appropriate for the lead of the article, this information should also be included in the body of the article. (August 2019) (Learn how and when to remove this template message) A concordance is an alphabetical list of the principal words used in a book or body of work, listing every instance of each word with its immediate context. Histo…

North American F-100 Super Sabre menjatuhkan napalm dalam sebuah latihan. Napalm adalah campuran pembakar dari bahan pembentuk gel dan petrokimia yang mudah menguap (biasanya bensin atau bahan bakar diesel). Nama napalm berasal dari gabungan dua konstituen berupa bahan pengental dan pembentuk gel: garam aluminium yang diendapkan dari asam naftena dan asam palmitat.[1] Napalm B adalah versi yang lebih modern dari napalm (memanfaatkan turunan polistirena) dan, meskipun sangat berbeda dalam…

American college football game that took place in Michigan in 2007 College football game2007 Appalachian State vs. Michigan Appalachian State Mountaineers Michigan Wolverines (0–0) (0–0) 34 32 Head coach: Jerry Moore Head coach: Lloyd Carr APCoaches 55 1234 Total Appalachian State 72133 34 Michigan 14396 32 DateSeptember 1, 2007[1]Season2007StadiumMichigan Stadium[1]LocationAnn Arbor, Michigan[1]FavoriteMichigan (no betting line)[2]RefereeJ…

Roman Catholic diocese in Italy Not to be confused with Roman Catholic Diocese of Massa Carrara-Pontremoli. Diocese of Massa Marittima-PiombinoDioecesis Massana-PlumbinensisMassa Marittima CathedralLocationCountryItalyEcclesiastical provinceSiena-Colle di Val d'Elsa-MontalcinoStatisticsArea1,200 km2 (460 sq mi)Population- Total- Catholics(as of 2021)126,700 (est.)124,750 (guess)Parishes53InformationDenominationCatholic ChurchRiteRoman RiteEstablished5th centuryCathedralB…

Subdivision of Kherson Oblast, Ukraine Raion in Kherson Oblast, UkraineSkadovskyi Raion Скадовський районRaion FlagCoat of armsCoordinates: 46°10′30.8706″N 32°53′11.4174″E / 46.175241833°N 32.886504833°E / 46.175241833; 32.886504833Country UkraineOblast Kherson OblastEstablished1923Admin. centerSkadovskSubdivisions9 hromadasGovernment • GovernorYegor UstynovArea[1] • Total5,255 km2 (2,029 sq&#…

Susur pesisir dekat Porthclais, Pembrokeshire Susur pesisir adalah pergerakan di sepanjang zona intertidal[1] dari garis pantai berbatu dengan berjalan kaki atau dengan berenang, tanpa bantuan perahu, papan selancar, atau kerajinan lainnya. Susur pesisir memungkinkan seseorang untuk bergerak di zona benturan antara badan air dan pantai tempat gelombang, pasang surut, angin, bebatuan, tebing, selokan, dan gua berkumpul. Istilah susur pesisir dalam Bahasa Inggris yaitu coasteering dicetusk…

REG3G المعرفات الأسماء المستعارة REG3G, LPPM429, PAP IB, PAP-1B, PAP1B, PAPIB, REG III, REG-III, UNQ429, regenerating family member 3 gamma معرفات خارجية الوراثة المندلية البشرية عبر الإنترنت 609933 MGI: MGI:109406 HomoloGene: 128216 GeneCards: 130120 علم الوجود الجيني الوظيفة الجزيئية • carbohydrate binding• peptidoglycan binding• oligosaccharide binding• transmembrane signaling receptor a…

Regional dish of Syracuse, New York Salt potatoesCooking salt potatoesCourseSide dishPlace of originUnited StatesRegion or stateNortheastServing temperatureHotMain ingredients Bite-size young white potatoes Salt Melted butter Salt potatoes are a regional dish of Syracuse, New York, typically served in the summer when the young potatoes are first harvested. They are a staple food at fairs and barbecues in the Central New York region, where they are most popular. Potatoes specifically intended for…

Chiesa di San SistoFacciataStato Italia RegioneEmilia-Romagna LocalitàPiacenza IndirizzoVia San Sisto 9 b Coordinate45°03′26.46″N 9°41′35.38″E / 45.057349°N 9.693161°E45.057349; 9.693161Coordinate: 45°03′26.46″N 9°41′35.38″E / 45.057349°N 9.693161°E45.057349; 9.693161 Religionecattolica Diocesi Piacenza-Bobbio Stile architettonicorinascimentale Inizio costruzione1490 Completamento1511 Sito webSito diocesano Modifica dati su Wikidata&#…

Si ce bandeau n'est plus pertinent, retirez-le. Cliquez ici pour en savoir plus. Cet article ne cite pas suffisamment ses sources (mai 2017). Si vous disposez d'ouvrages ou d'articles de référence ou si vous connaissez des sites web de qualité traitant du thème abordé ici, merci de compléter l'article en donnant les références utiles à sa vérifiabilité et en les liant à la section « Notes et références ». En pratique : Quelles sources sont attendues ? Comment …

Salib Maria Logo Kerasulan Paus Yohanes Paulus II Salib Maria adalah sebuah nama informal yang diberikan pada sebuah rancangan salib Katolik Roma. Salib tersebut terdiri atas sebuah salib Latin tradisional dengan palangnya melintang hingga ke ujung kanan, dan sebuah huruf M (untuk Maria) di bagian kanan bawahnya. Tampilan terkenal Salib Maria yang pertama adalah pada gambar logo kerasulan Paus Yohanes Paulus II, dipajang dengan jelas di peti matinya pada saat upacara pemakamannya (Sri Paus terke…

يفتقر محتوى هذه المقالة إلى الاستشهاد بمصادر. فضلاً، ساهم في تطوير هذه المقالة من خلال إضافة مصادر موثوق بها. أي معلومات غير موثقة يمكن التشكيك بها وإزالتها. (فبراير 2016) القضاء في موريتانيا يرجع أول تنظيم قضائي موريتاني إلي القانون رقم 012 الصادر بتاريخ 27 يونيو 1971م، وقد تميز هذ…